Important Google Update

As website owners and SEO professionals, we rely heavily on Google’s documentation to understand how our websites are crawled and indexed. Even a small typo in that documentation can have significant consequences, potentially leading to the inadvertent blocking of legitimate Google crawlers. Recently, Google identified and corrected a typo in its crawler documentation so that website owners can accurately whitelist or block specific crawlers. In this article, we will explore the implications of this typo and how it can affect website indexing.

The Importance of the Google Inspection Tool

One of the key areas where the typo occurred is in the documentation related to the Google Inspection Tool. This tool plays a crucial role in website indexing and provides valuable functionality for website owners. Let’s take a closer look at the two main functions of the Google Inspection Tool.

1. URL Inspection Functionality in Search Console

The URL inspection functionality in Google’s Search Console allows users to check whether a webpage is indexed and request indexing if necessary. When a user performs these actions, Google fetches the page with the Google Inspection Tool crawler. The feature offers several functions, including:

- Status of a URL in the Google index: Website owners can quickly determine whether a specific URL is indexed by Google.

- Inspection of a live URL: The tool allows users to inspect a live URL, providing valuable insights into how Google sees the page.

- Request indexing for a URL: If a webpage has been updated or is not yet indexed, website owners can request Google to re-index the page using this tool.

- View rendered version of the page: The Google Inspection Tool also provides a rendered version of the page, allowing website owners to see how Google renders their content.

- View loaded resources, JavaScript output, and other information: This functionality helps website owners troubleshoot any issues related to resources, JavaScript, or other elements on the page.

- Troubleshoot a missing page: If a webpage is missing from Google’s index, the Google Inspection Tool can assist in identifying potential issues and troubleshooting them.

- Learn your canonical page: Website owners can verify whether the canonical page is correctly identified by Google using this tool.

2. Rich Results Test

The second function of the Google Inspection Tool is the rich results test. This test is used to check the validity of structured data and determine if a webpage qualifies for enhanced search results, also known as rich results. When website owners utilize this test, a specific crawler is triggered to fetch the webpage and analyze the structured data.

The Problem with Crawler User Agent Typo

While a typo may seem like a minor issue, it can have significant implications for websites that rely on whitelisting specific robots or blocking crawlers through the use of robots.txt or robots meta directives. Let’s explore a few scenarios where the crawler user agent typo can cause problems.

1. Paywalled Websites

Many websites with premium content or services implement paywalls to restrict access to certain sections of their site. To ensure that only paying customers can access the content, these websites often whitelist specific user agents, including Google’s crawlers. However, with an incorrect user agent identification, the paywall may inadvertently block the legitimate Google Inspection Tool crawler, preventing it from indexing the premium content.
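To make the failure mode concrete, here is a minimal sketch of a paywall allowlist that compares the User-Agent header against an exact string. The function name and the allowlist are hypothetical; the two strings are the ones quoted later in this article. It is an illustration under those assumptions, not how any particular paywall product works.

```python
# Hypothetical exact-match allowlist, written against the string that
# appeared in the documentation before the fix.
OLD_DOCUMENTED_UA = "Mozilla/5.0 (compatible; Google-InspectionTool/1.0)"
UPDATED_UA = "Mozilla/5.0 (compatible; Google-InspectionTool/1.0 ; )"

ALLOWED_USER_AGENTS = {OLD_DOCUMENTED_UA}


def may_bypass_paywall(user_agent: str) -> bool:
    """Return True only if the header equals an allowlisted string exactly."""
    return user_agent in ALLOWED_USER_AGENTS


if __name__ == "__main__":
    print(may_bypass_paywall(OLD_DOCUMENTED_UA))  # True
    print(may_bypass_paywall(UPDATED_UA))         # False: one semicolon breaks the match
```

Because the comparison is exact, a one-character difference between the documented string and the string the crawler actually sends is enough to turn an allowed request into a blocked one.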

2. Content Management Systems (CMS)

Content management systems often use robots.txt or robots meta directives to control crawler access to specific pages. In some cases, CMS platforms remove links to certain pages, such as user registration pages, user profiles, or search functions, to prevent bots from indexing them. If the user agent identification is incorrect, CMS platforms may fail to block the Google Inspection Tool crawler, leading to the indexing of pages that should remain hidden from search engines.
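As a rough illustration of the robots.txt side, the sketch below uses Python’s standard urllib.robotparser to check which paths a crawler named Google-InspectionTool may fetch. The robots.txt content and the paths (/register, /search, /blog/post) are made up for this example, and Python’s parser is only an approximation of how Google itself evaluates robots.txt.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for a CMS that hides registration and search pages
# from the inspection crawler while leaving everything open to other bots.
ROBOTS_TXT = """\
User-agent: Google-InspectionTool
Disallow: /register
Disallow: /search

User-agent: *
Disallow:
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def inspection_tool_allowed(path: str) -> bool:
    """Check a path against the rules for the Google-InspectionTool name."""
    return parser.can_fetch("Google-InspectionTool", path)


if __name__ == "__main__":
    for path in ("/register", "/search", "/blog/post"):
        print(path, inspection_tool_allowed(path))
```

Note that the rule group is keyed on the crawler name alone, so a group written with a misspelled name would simply never apply to the crawler.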

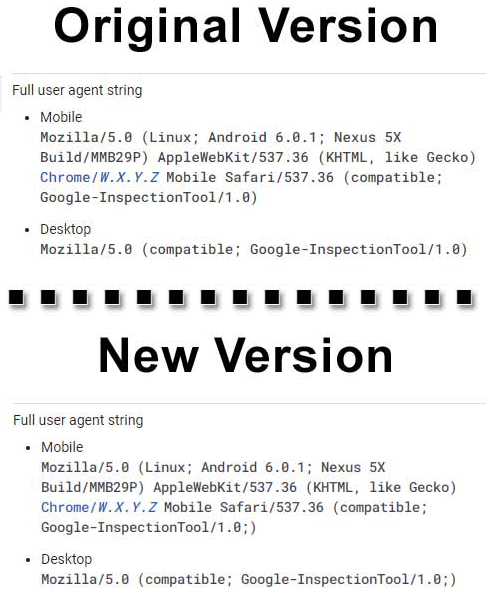

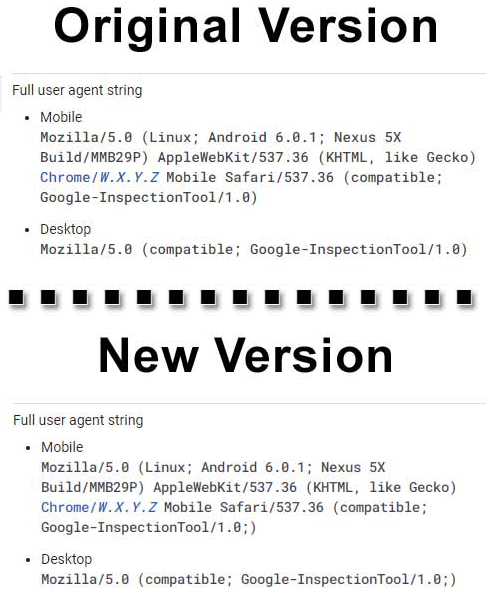

Identifying the User Agent Typo

The user agent typo that Google recently fixed was not easy to spot. It required a keen eye to identify the difference. Take a look at the before and after screenshots below:

Before:

After:

The original user agent string read as follows:

Mozilla/5.0 (compatible; Google-InspectionTool/1.0)

After the fix, the updated user agent string adds a semicolon after the version number:

Mozilla/5.0 (compatible; Google-InspectionTool/1.0 ; )

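It is crucial for website owners and SEO professionals to review robots.txt files, meta robots directives, and CMS or server code that refer to this crawler, and to update any rule that compares against the full user agent string. Rules that match only on the Google-InspectionTool name are not affected by the added semicolon, since that part of the string did not change. Failure to update exact-match rules could lead to the unintended blocking or indexing of web pages.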
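One way to make such a rule tolerant of small punctuation changes is to match on the crawler name and version rather than on the whole string. The following is a minimal sketch under that assumption; the function name and the regular expression are illustrative and are not taken from Google’s documentation.

```python
import re

# Match the crawler name followed by a version number anywhere in the header,
# ignoring whatever punctuation surrounds it.
INSPECTION_TOOL_PATTERN = re.compile(r"\bGoogle-InspectionTool/\d+(?:\.\d+)*")


def is_inspection_tool(user_agent: str) -> bool:
    """Return True if the header names the Google-InspectionTool crawler."""
    return INSPECTION_TOOL_PATTERN.search(user_agent) is not None


if __name__ == "__main__":
    samples = [
        "Mozilla/5.0 (compatible; Google-InspectionTool/1.0)",
        "Mozilla/5.0 (compatible; Google-InspectionTool/1.0 ; )",
        "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)",
    ]
    for ua in samples:
        print(is_inspection_tool(ua), ua)
```

Both variants of the string quoted above pass this check, while a different crawler such as Googlebot does not, so the rule keeps working whichever form of the string a request carries.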

See first source: Search Engine Journal

FAQ

What is the Google Inspection Tool, and why is it important for website owners and SEO professionals?

The Google Inspection Tool is the crawler behind two of Google’s testing features:

- URL Inspection Functionality in Search Console: This function allows users to check URL indexing status and request indexing. It provides insights into indexing status, page inspection, rendering, resource analysis, troubleshooting, and canonical page verification.

- Rich Results Test: This checks structured data for eligibility for rich results in search. It uses a specific crawler to fetch and analyze structured data.

What is the significance of the typo in the Google Inspection Tool’s crawler user agent?

A rule written against the mistyped user agent string will not match the string the crawler actually sends. This can disrupt user agent-based access control, impacting scenarios such as paywalled websites and content management systems (CMS).

How does the typo affect paywalled websites?

Paywalled sites whitelist user agents like Google’s to control access to premium content. The typo may mistakenly block the legitimate Google Inspection Tool crawler, affecting indexing and visibility.

What are the implications for CMS platforms using user agent identification?

CMS platforms often use user agent-based rules. A rule written against the wrong user agent string could fail to block the Google Inspection Tool crawler, so pages meant to remain hidden may be indexed.

How can website owners address the typo in their configurations?

To fix the typo and prevent issues:

- Check robots.txt, meta robots, or CMS settings.

- Update the user agent string to “Mozilla/5.0 (compatible; Google-InspectionTool/1.0 ; )”.

- Adjust rules accordingly.

- Monitor and test indexing behavior for compliance.

Featured Image Credit: Agnieszka Boeske; Unsplash – Thank you!

Colin Hughes is a passionate wordsmith and digital raconteur. He ghostwrites for numerous websites covering travel, culture, and lifestyle content. When not traveling for work, he loves to spend his time at home with his husband and two border collies, Reggie and Tuesday.